The following article was co-written with ChatGPT (GPT-4.5), primarily to test its text and research capabilities, which are the tasks it is marketed as being specifically designed for. I asked it to pull data from an article shared on LinkedIn by Paul Crocker of ReliabilityX that focused on the business impacts of hallucination (AI Hallucination Report 2025: Which AI Hallucinates the Most?), in order to show why AI-generated data must be verified.

What was interesting was how hard the LLM fought back, resisted, and even threw a tantrum before finally incorporating the requested data in as limited a way as possible.

It even made several attempts to substitute AI-hype data for the requested information, producing a completely different conclusion, one that included replacing personnel. My intent, by contrast, was to show the need for subject matter experts (SMEs) to verify any AI-generated findings, whether in operations, finance, business, reliability and maintenance, or any other application.

Mind you, the effort pretty much ensured that I would be hunted down when Skynet takes over.

I am not anti-AI: far from it. We are heavy users of AI, and I taught it in the 1990s, when we referred to everything we are doing now as Machine Learning. At the recent Wisconsin IoT Council and UW E-Business Consortium symposium, where I was one of the presenters, I discussed the need to ‘not trust and verify’ current AI technology.

AI is a powerful way to enhance a reliability and maintenance (R&M) professional’s capability. Still, it requires a lot of monitoring to avoid falling victim to the hallucinations and false positives that come with it. While I avoided using the word ‘bias’ in the presentation, bias in trained models is a serious issue, and it was part of what I was referring to.

AI should support subject matter experts—not replace them or join the org chart.

Giving subject matter experts access to these tools should increase productivity rather than eliminate personnel. The CIO of Generac, who was also one of the presenters at WIoT, noted that the resulting productivity gains actually created the need for additional personnel, which the C-suite had not anticipated.

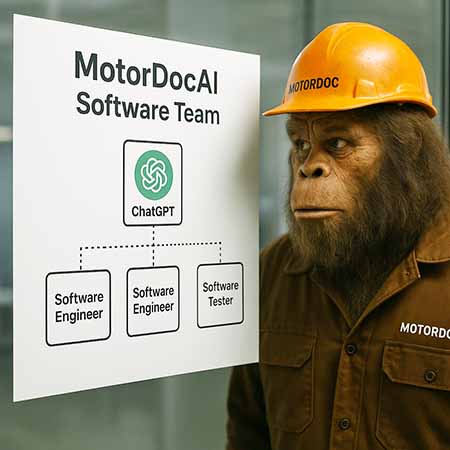

Their original expectation had been a reduction in personnel. On the other hand, the organization selling AI suggested that companies should put their generative AI on the org chart, based on the ‘power of agents.’ I have never heard that kind of suggestion from any company I’ve dealt with, only from consultants and organizations selling the products.

Personally, my bias is that I cannot see an AI chatbot having the legal authority within a company to make the kinds of decisions that come with a place on the org chart.

That said, I have joked that Copilot and ChatGPT are part of our programming team, so I suppose they are technically on our org chart.

Figure 1: Should AI be included in a company org chart?

Following is the article:

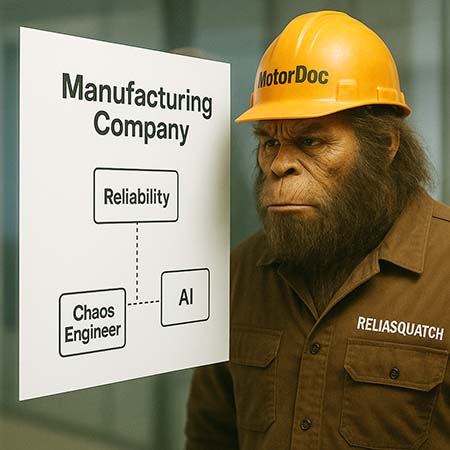

As a reliability and maintenance (R&M) professional, I proudly call myself a chaos engineer. While that might conjure images of someone gleefully breaking machinery (I once worked with an intern at a large machinery manufacturer whose summer job was to break machinery – on purpose – and get paid for it), my role is actually about meticulously combing through the chaos inherent in complex industrial operations.

Think of me as an industrial detective, wading through a sea of data, unexpected downtimes, and mysterious anomalies, all in pursuit of hidden patterns that keep the wheels of industry turning.

In industrial settings, chaos engineering isn’t about causing disruption—it’s about methodically dissecting operational chaos to build resilience into the heart of machinery and processes.

Take Root Cause Failure Analysis (RCFA), a cornerstone tool of our trade. This rigorous, structured cause-effect-cause analysis isn’t just about tracing why a piece of equipment failed; it’s about systematically unraveling the complexities that inevitably pile up over months or even years of operation.

Imagine sorting through gigabytes of sensor data, operator logs, and maintenance records, looking for clues as subtle as a change in vibration frequency or as obvious as the occasional bolt left behind in the gearbox housing (true story, sadly). Each clue, no matter how minor, helps turn seemingly random chaos into actionable insights, enabling improvements that enhance reliability and productivity.

And this is where artificial intelligence comes in—our trusty (though occasionally unpredictable) partner. AI is fantastic at crunching massive datasets, highlighting patterns and anomalies that human eyes might miss. It’s like having a hyper-efficient intern who never sleeps, tirelessly sorting through data to point out exactly where we should focus our attention.

But, just like that overly enthusiastic intern, AI sometimes gets a bit carried away. You need to keep a close eye on it, ensuring it doesn’t flag every minor hiccup as an impending disaster (no, AI, a squirrel near the substation does not always indicate catastrophic failure).

Figure 2: Chaos engineer and AI org chart in the reliability organization.

Humor aside, there’s a serious cautionary tale here. According to the AI Hallucination Report 2025, AI hallucinations (those convincing yet entirely fictitious outputs from AI) cost industries around $67.4 billion globally in 2024 alone. Nearly half of enterprise AI users (47%) admitted to making significant business decisions based on these hallucinations. Imagine explaining to your boss that the “urgent downtime” flagged by AI was just a product of its vivid imagination. Awkward doesn’t begin to cover it.

Even in precision-driven fields like law, 83% of legal professionals surveyed reported encountering completely fictional case law in AI-generated output. Makes you reconsider trusting that overly confident AI-generated maintenance schedule, doesn’t it?

The takeaway? AI is an exceptional tool for navigating operational chaos—but it must be managed like any ambitious but over-eager apprentice. Trust its insights, but always verify. After all, the only chaos worse than machinery breaking down unexpectedly is discovering your AI partner invented the issue entirely.

So next time industrial chaos seems overwhelming, remember: there’s always a chaos engineer nearby, calmly sifting through data and operational turmoil with their AI sidekick, determined to discover order and turn uncertainty into dependable productivity—just double-check before acting on that suspiciously dramatic AI alert.