Augmented Intelligence, Not Artificial Wisdom

Artificial Intelligence (AI) is becoming the next frontier in physical asset management. From predicting failure to optimizing work execution, it offers massive potential. But here’s the caution: AI is not a magic wand. It’s a pattern detector, not a problem solver. It lacks the contextual intelligence, field experience, and failure mode understanding that only subject matter experts bring to the table.

AI can support good decisions. But it can also amplify bad ones, at scale. And that’s why it requires an expert eye.

The False Comfort of Algorithms

Let’s start with the obvious. AI doesn’t “understand” physical assets. It correlates inputs and outcomes. It’s blind to failure mechanisms, material fatigue, and tribological realities such as lubricity. It will flag noise as signal and overlook emerging issues unless it’s taught what to look for and validated by someone who understands what matters.

It looks intelligent. But without expert framing, it’s a parrot with a calculator.

Hallucinations—When AI Makes Things Up

One of the most dangerous—and least discussed—risks with AI is hallucination: when the model generates outputs that are factually wrong but seem authoritative. These hallucinations don’t just occur in text generation. In the asset world, they show up as false correlations, faulty work order recommendations, or even invented failure modes that no competent engineer would recognize.

Imagine an AI system recommending a vibration-based intervention for a piece of equipment that doesn’t rotate. Or suggesting a temperature threshold that no manufacturer ever published. It’s not lying—it’s interpolating from patterns in data it doesn’t fully understand.

In one case I reviewed, an AI tool incorrectly linked filter clogging on hydraulic systems to ambient humidity—ignoring known contaminants in the oil circuit. Had the recommendation been blindly followed, the root cause would have remained unresolved, and the failure would have repeated.

The takeaway? AI doesn’t know when it doesn’t know. It can’t signal uncertainty the way a human apprentice might. That’s why hallucinations are so dangerous—they’re confidently wrong. Without expert review, they get mistaken for insight.

AI Needs an Expert Eye—Always

Artificial Intelligence is a powerful enabler in the world of physical asset management, but let’s not kid ourselves: it’s not a substitute for human expertise. As the comparison in Figure 1 makes clear, AI excels at correlating inputs and spotting patterns. Still, it lacks an understanding of failure mechanisms, tribological behavior, and material science, which are the things that matter in the real world.

It can support decisions, yes, but without field-proven context, it risks amplifying errors. AI automates data collection and delivers microlearning, but it doesn’t know what good looks like. That’s where human experts come in.

We separate signal from noise, interpret uncertainty, and apply critical thinking. We build playbooks, shape skills, and govern MRO (maintenance, repair, and operations) strategy. We make the tough, nuanced calls that AI can’t. In short, AI is a pattern recognition powerhouse, but it still needs an expert eye to translate patterns into precision.

Figure 1. Augmenting experts with AI produces a significant synergy.

Garbage In, Garbage Out: Why Data Quality—and Master Data—Matter More Than Ever

If AI is the engine of modern asset management, then data is the fuel—and bad fuel ruins good engines. No matter how sophisticated your AI models are, they can’t overcome poor data quality. Dirty, incomplete, or misaligned data will cause AI to amplify the wrong signals, recommend the wrong interventions, and erode trust in the system. In this context, master data isn’t just a technical detail—it’s a strategic asset.

Dirty data turns AI from asset to liability.

High-value AI applications in asset management—whether it’s predictive maintenance, MRO optimization, or skills-based task guidance—depend on a clean, structured, and complete dataset. That starts with accurate master data: asset hierarchies aligned to ISO 14224 or similar frameworks, correct equipment IDs, location structures, consistent naming conventions, and linked bills of materials.

If the system can’t distinguish between two similar pumps—or doesn’t know what spare parts they require—it can’t deliver intelligent recommendations. Worse, it may recommend the wrong actions entirely.
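To make “accurate master data” concrete, here is a minimal Python sketch of the kind of automated audit that catches these problems before an AI model ever sees them. The record fields, the tag regex, and the sample assets are hypothetical assumptions, not taken from ISO 14224 or any specific CMMS; a real audit would follow your own taxonomy.

```python
import re
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# Hypothetical tag convention: 1-3 letters, a dash, 3-4 digits, optional train letter (e.g. P-101A).
TAG_PATTERN = re.compile(r"^[A-Z]{1,3}-\d{3,4}[A-Z]?$")


@dataclass
class AssetRecord:
    equipment_id: str
    description: str
    location: str
    bom_id: Optional[str]  # None means no bill of materials is linked


def audit_master_data(records: list[AssetRecord]) -> dict[str, list[str]]:
    """Map each issue type to the equipment IDs that exhibit it."""
    issues: dict[str, list[str]] = {
        "duplicate_id": [], "bad_tag": [], "no_location": [], "no_bom": []
    }
    counts = Counter(r.equipment_id for r in records)
    for r in records:
        # Two records sharing one ID means the system cannot tell the assets apart.
        if counts[r.equipment_id] > 1 and r.equipment_id not in issues["duplicate_id"]:
            issues["duplicate_id"].append(r.equipment_id)
        if not TAG_PATTERN.match(r.equipment_id):
            issues["bad_tag"].append(r.equipment_id)
        if not r.location.strip():
            issues["no_location"].append(r.equipment_id)
        # Without a linked BOM, no spare-parts recommendation is possible.
        if r.bom_id is None:
            issues["no_bom"].append(r.equipment_id)
    return issues


records = [
    AssetRecord("P-101A", "Cooling water pump", "Area 1/CW", "BOM-7781"),
    AssetRecord("P-101A", "Cooling water pump (spare)", "Area 1/CW", None),
    AssetRecord("pump 102", "Pump", "", "BOM-7790"),
]
print(audit_master_data(records))
```

Each finding maps to a failed recommendation: a duplicate ID means two pumps are indistinguishable, and a missing BOM means the system can’t know what spares they need.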

But it’s not just about technical accuracy. It’s about contextual fidelity. AI must be trained on asset data that reflects operating conditions, service histories, failure modes, and usage profiles that actually matter in your environment—not generic global assumptions.

That means tying together master data with transactional data (work orders, condition monitoring reports, inspection findings), and ensuring that what gets captured reflects reality in the field. Inconsistent naming, missing metadata, or unstructured notes are kryptonite to AI—because they destroy the pattern clarity AI needs to function.

To unlock the full value of AI, organizations must treat data governance as a frontline reliability function. That means building data standards, validating inputs, enforcing data discipline at the point of entry, and continually cleaning and enriching the dataset as part of normal operations—not just as a one-off project.
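Enforcing discipline at the point of entry can be as simple as refusing work order records that don’t tie back to master data. The sketch below assumes hypothetical controlled lists (`KNOWN_ASSETS`, `FAILURE_CODES`) and an arbitrary five-word minimum for completion notes; in practice these would come from your CMMS configuration and data standards.

```python
from dataclasses import dataclass

# Hypothetical controlled lists; a real site would load these from its CMMS.
KNOWN_ASSETS = {"P-101A", "P-101B", "GB-220"}
FAILURE_CODES = {"BRG-WEAR", "SEAL-LEAK", "MISALIGN", "CONTAM", "NONE"}
MIN_NOTE_WORDS = 5  # arbitrary threshold for a usable completion note


@dataclass
class WorkOrderEntry:
    asset_id: str
    failure_code: str
    actual_hours: float
    notes: str


def validate_entry(wo: WorkOrderEntry) -> list[str]:
    """Return every problem found; an empty list means the entry can be accepted."""
    problems = []
    # Transactional data is only useful if it ties back to a master-data record.
    if wo.asset_id not in KNOWN_ASSETS:
        problems.append(f"unknown asset '{wo.asset_id}' (not in master data)")
    if wo.failure_code not in FAILURE_CODES:
        problems.append(f"failure code '{wo.failure_code}' is not in the controlled list")
    if wo.actual_hours <= 0:
        problems.append("actual hours must be positive")
    if len(wo.notes.split()) < MIN_NOTE_WORDS:
        problems.append("completion notes are too short to be useful")
    return problems


good = WorkOrderEntry("P-101A", "SEAL-LEAK", 3.5,
                      "Replaced mechanical seal, flushed housing, checked alignment")
bad = WorkOrderEntry("P-10lA", "fixed", 0, "done")  # note the lowercase L typo in the ID
print(validate_entry(good))
print(validate_entry(bad))
```

Rejecting the record at entry is cheaper than cleaning it later, because the technician who can supply the missing detail is still at hand.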

Because in asset management, the real magic of AI isn’t the algorithm—it’s the quality of the questions it can answer, and that’s only as good as the quality of the data it sees.

AI Can Inform Asset Strategy, But It Can’t Write It

AI absolutely has a role to play in asset management strategy—but it’s a supporting role, not the lead. It’s brilliant at pattern recognition. Feed it enough clean, contextualized data, and it can help uncover correlations between condition indicators, failure events, maintenance history, and even operator behavior.

It can cluster assets by performance characteristics, flag outliers, and offer early warning signals. That’s powerful, but raw pattern detection isn’t a strategy. Strategy demands understanding. It requires knowledge of failure modes, asset criticality, environmental conditions, and the business context in which those assets operate.
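As an illustration of flagging outliers, the sketch below uses the modified z-score (median and median absolute deviation), a common robust-statistics technique chosen here for simplicity; the article doesn’t specify what any particular AI tool uses. The metric (bearing temperature rise in °C), the asset names, and the 3.5 cut-off are assumptions.

```python
import statistics


def robust_outliers(values: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Flag assets whose metric sits far from the fleet median.

    Uses the modified z-score, 0.6745 * (x - median) / MAD, where MAD is the
    median absolute deviation; 3.5 is a commonly cited cut-off.
    """
    data = list(values.values())
    med = statistics.median(data)
    mad = statistics.median(abs(x - med) for x in data)
    if mad == 0:
        return []  # no spread across the fleet, so nothing stands out by this test
    return [name for name, x in values.items()
            if abs(0.6745 * (x - med) / mad) > threshold]


# Hypothetical bearing temperature rise above ambient (°C) for six similar pumps.
temps = {"P-101A": 21.0, "P-101B": 22.5, "P-102A": 20.8,
         "P-102B": 23.1, "P-103A": 21.9, "P-103B": 34.0}
print(robust_outliers(temps))
```

Note what the output is: a name, not a cause. Whether the flagged pump runs hot because of over-greasing, misalignment, or a failing sensor is still a question for the expert.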

AI doesn’t know whether a high vibration reading is due to misalignment, unbalance, or just a bad transducer—and it certainly doesn’t know which failure modes matter most to your operation.

That’s where human expertise comes in. Crafting an asset strategy means making tradeoffs—between reliability and cost, risk and return, uptime and investment. AI can model scenarios, but it can’t weigh stakeholder priorities, interpret subtle process dynamics, or manage organizational change.

And let’s be honest: AI won’t call out poor lubrication practices or highlight the cultural barriers to executing a condition-based strategy. That takes an expert eye. So yes, bring AI to the table. Let it surface insights and speed up analysis. But don’t let it write the playbook. That’s still the job of a seasoned professional who understands the physical realities of assets, the economics of maintenance, and the human systems required to make strategy stick.

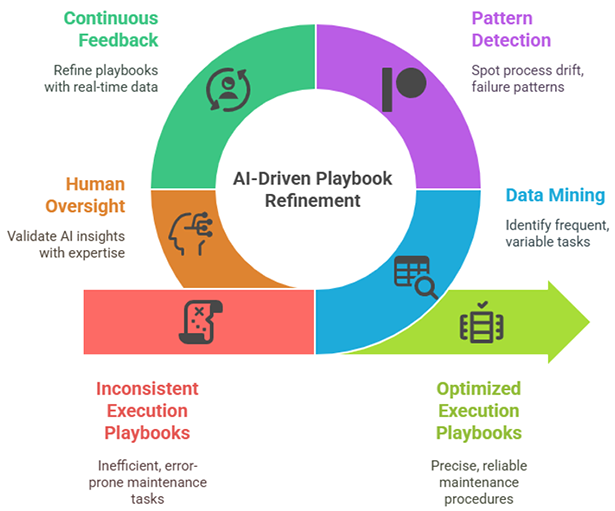

Using AI to Build Better Execution Playbooks—But Not Without Human Oversight

In the domain of physical asset management, execution playbooks are the connective tissue between strategy and reality. They define the standard work: the step-by-step guidance, tools required, torque specs, safety checks, condition monitoring triggers, and job sequencing needed to execute maintenance and operational tasks with precision. And this is where AI offers real promise—not as the author of the playbook, but as a tireless research assistant, pattern detector, and continuous feedback engine.

AI’s first contribution is surfacing standardizable work from noisy historical data. By mining thousands of completed work orders, AI can identify which tasks occur most frequently, where variation exists in execution time or outcome, and where corrective work tends to follow poorly executed preventive jobs.
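A minimal sketch of that mining step, assuming work orders exported as simple tuples (asset, task code, PM/CM type, completion date, hours). The coefficient of variation stands in for “variation in execution time,” and the 30-day window for “corrective work following a preventive job” is an arbitrary assumption to tune per asset class.

```python
import statistics
from collections import defaultdict
from datetime import date

# Assumed export layout: (asset_id, task_code, work_type "PM"/"CM", completed_on, hours)
WorkOrder = tuple[str, str, str, date, float]


def task_variation(orders: list[WorkOrder]) -> dict[str, tuple[int, float]]:
    """{task_code: (count, coefficient of variation of hours)} for tasks seen at least twice."""
    hours = defaultdict(list)
    for _, task, _, _, h in orders:
        hours[task].append(h)
    return {t: (len(hs), statistics.stdev(hs) / statistics.mean(hs))
            for t, hs in hours.items() if len(hs) >= 2}


def pm_followed_by_cm(orders: list[WorkOrder], window_days: int = 30) -> list[tuple[str, date]]:
    """(asset, PM date) pairs where corrective work hit the same asset within window_days."""
    by_asset = defaultdict(list)
    for asset, _, work_type, done, _ in orders:
        by_asset[asset].append((done, work_type))
    hits = []
    for asset, events in by_asset.items():
        events.sort()  # chronological order per asset
        for i, (pm_date, work_type) in enumerate(events):
            if work_type != "PM":
                continue
            if any(w == "CM" and 0 < (d - pm_date).days <= window_days
                   for d, w in events[i + 1:]):
                hits.append((asset, pm_date))
    return hits


orders = [
    ("GB-220", "LUBE-OIL-CHG", "PM", date(2024, 1, 10), 2.0),
    ("GB-220", "BRG-REPLACE", "CM", date(2024, 1, 28), 9.5),
    ("P-101A", "LUBE-OIL-CHG", "PM", date(2024, 1, 12), 2.5),
    ("P-101A", "LUBE-OIL-CHG", "PM", date(2024, 4, 12), 6.0),
]
print(task_variation(orders))
print(pm_followed_by_cm(orders))
```

High-frequency tasks with a high coefficient of variation, or PMs repeatedly followed by breakdowns, are natural first candidates for a formal playbook.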

It can spot process drift—such as changes in torque readings or task durations—and flag assets where planned maintenance consistently fails to prevent breakdowns. With human guidance, AI can cluster jobs by asset type, failure mode, and risk, helping asset managers prioritize which tasks should be codified into formal playbooks first.
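For detecting drift in a recurring measurement such as task duration, one standard statistical process control technique is the one-sided tabular CUSUM, sketched below. It is a stand-in, not a claim about how any commercial tool works; the target, slack, and limit values are assumptions that would normally be set from the task’s historical mean and standard deviation.

```python
from typing import Optional


def cusum_drift(values: list[float], target: float, slack: float,
                limit: float) -> Optional[int]:
    """One-sided (upward) tabular CUSUM.

    Accumulates how far each value exceeds target + slack, resetting at zero,
    and returns the index where the running sum first exceeds `limit`,
    or None if no sustained upward drift is signalled.
    """
    s = 0.0
    for i, x in enumerate(values):
        s = max(0.0, s + (x - target - slack))
        if s > limit:
            return i
    return None


# Hypothetical durations (hours) of a recurring 2-hour job, oldest first.
durations = [2.0, 2.1, 1.9, 2.0, 2.4, 2.6, 2.5, 2.7, 2.8]
print(cusum_drift(durations, target=2.0, slack=0.1, limit=1.0))
```

A single long job doesn’t trip the alarm; a sustained shift does. That is the difference between noise and drift.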

AI also supports the continuous refinement of playbooks. By ingesting real-time execution data—completion timestamps, sensor readings, condition monitoring reports, and technician notes—it can help assess whether jobs are being performed to plan and whether the plan itself is still fit for purpose. This is especially powerful when paired with mobile execution platforms.

AI can analyze the delta between planned and actual task time, flag outliers, and recommend adjustments to labor estimates, pre-work steps, or inspection points. Over time, this builds a feedback loop where the playbook isn’t just static documentation—it becomes a living document informed by field data and refined through machine learning.
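The planned-versus-actual feedback loop can be sketched in a few lines. This version assumes history rows of (task code, planned hours, actual hours) and a 20% tolerance, both illustrative; it proposes a revised estimate but leaves the decision to adopt it with the planner.

```python
import statistics
from collections import defaultdict


def review_estimates(history: list[tuple[str, float, float]],
                     tolerance: float = 0.2) -> dict[str, float]:
    """Suggest revised labor estimates from (task_code, planned_hours, actual_hours) rows.

    If a task's median actual/planned ratio is off by more than `tolerance`,
    propose its median actual hours as the new estimate; otherwise omit it.
    """
    by_task = defaultdict(list)
    for task, planned, actual in history:
        by_task[task].append((planned, actual))
    suggestions = {}
    for task, rows in by_task.items():
        ratio = statistics.median(a / p for p, a in rows)
        if abs(ratio - 1.0) > tolerance:
            suggestions[task] = statistics.median(a for _, a in rows)
    return suggestions


history = [
    ("ALIGN-LASER", 2.0, 3.1), ("ALIGN-LASER", 2.0, 2.9), ("ALIGN-LASER", 2.0, 3.4),
    ("GREASE-RT7", 1.5, 1.6), ("GREASE-RT7", 1.5, 1.4),
]
print(review_estimates(history))
```

Medians are used instead of means so that a single job disrupted by, say, a parts delay doesn’t drag the estimate.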

But here’s the catch: AI still needs a domain expert at the helm. It doesn’t understand why a gearbox needs to be warmed before oil is sampled, or that torque sequence matters for flange integrity. It doesn’t recognize when “check for abnormal noise” is too vague to be useful, or that “hand-tighten” varies wildly between individuals.

AI can suggest where to look, but only experienced professionals can validate what good looks like. The best playbooks still require FLAB thinking—fasteners torqued to spec, lubrication executed precisely, alignment verified, and balance confirmed—all backed by human experience.

So, yes, use AI to accelerate playbook development. Let it do the heavy lifting of data mining, variation analysis, and performance feedback. But don’t delegate authorship. The best execution playbooks are written by those who’ve done the work, felt the vibration, smelled the burnt oil, and understand the consequences of getting it wrong. AI is a scalpel. You still need the surgeon.

Figure 2. Employ AI to augment human expertise to create consistent implementation playbooks.

Condition Monitoring: The Pulse Check AI Can’t Miss—But Shouldn’t Interpret Alone

Condition monitoring (CM) is one of the most powerful tools we have for proactive maintenance—and AI has the potential to supercharge its effectiveness. But let’s be clear: AI doesn’t know what a bearing sounds like when it’s just beginning to pit. It doesn’t feel the heat from a motor running just a bit too warm. CM is about sensing the earliest whispers of failure—and while AI can detect those whispers faster and at greater scale, it still takes expert ears to interpret the language.

AI excels at recognizing subtle trends across large datasets. It can analyze terabytes of vibration data, thermal imagery, ultrasonic feedback, electrical signatures, and process variables to detect patterns the human eye would miss. For example, it can correlate a minor uptick in harmonics with a slight decrease in pump efficiency and flag it weeks before failure becomes obvious.
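A simplified sketch of the harmonics-versus-efficiency example, using Pearson correlation over a trailing window. The window length, the −0.8 threshold, and the sample data are assumptions for illustration; production systems use far richer models. Correlation also says nothing about cause, which is exactly why the flag goes to an analyst rather than straight to a work order.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation of two equal-length series (0.0 if either is flat)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def flag_coupled_trend(harmonic_amp: list[float], efficiency: list[float],
                       window: int = 6, threshold: float = -0.8) -> bool:
    """True if, over the last `window` samples, harmonic amplitude rises while
    pump efficiency falls in lockstep (strong negative correlation)."""
    if len(harmonic_amp) < window or len(efficiency) < window:
        return False
    r = pearson(harmonic_amp[-window:], efficiency[-window:])
    rising = harmonic_amp[-1] > harmonic_amp[-window]
    return r <= threshold and rising


# Hypothetical weekly readings: amplitude of one harmonic (mm/s) and pump efficiency (%).
amp = [0.8, 0.8, 0.9, 1.0, 1.1, 1.3, 1.4, 1.6]
eff = [78.0, 78.1, 77.9, 77.6, 77.2, 76.8, 76.5, 76.0]
print(flag_coupled_trend(amp, eff))
```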

With enough labeled data, AI can even suggest likely causes. But here’s the rub: if the dataset is dirty, mislabeled, or lacks failure context, the model will hallucinate. It’ll treat noise as signal and signal as noise. That’s not just unhelpful—it’s dangerous.

What CM really needs is contextualization. AI can say, “this motor’s signature looks like one that failed before,” but it won’t know that this one runs in a salt-laden, high-humidity environment and hasn’t been realigned since installation. AI won’t catch that a thermal anomaly on a drive-end bearing correlates with a known misalignment issue post-rebuild. That’s where the human comes in: someone who understands FLAB, understands the failure modes, and understands how machines behave when stressed.

The ideal model is hybrid intelligence—AI watches everything, flags what matters, and feeds it to experts for validation and action. It becomes a force multiplier for your condition monitoring team. AI helps prioritize where to look, accelerates anomaly detection, and builds trend baselines faster.
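The hybrid model can be expressed as a workflow rule: the model may rank alerts, but only a recorded expert decision turns one into work. The sketch assumes a 1 to 5 criticality scale and treats the model’s anomaly score as a ranking aid, not a probability; the ranking formula (criticality times score) is a hypothetical choice.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING_REVIEW = "pending review"
    CONFIRMED = "confirmed"
    REJECTED = "rejected"


@dataclass
class Alert:
    asset_id: str
    finding: str
    model_score: float  # anomaly score in [0, 1]; a ranking aid, not a calibrated probability
    criticality: int    # 1 (low) to 5 (high), from the site's criticality ranking
    status: Status = Status.PENDING_REVIEW
    reviewer_note: str = ""


def review_queue(alerts: list[Alert]) -> list[Alert]:
    """Pending alerts, highest criticality x score first, so experts look where it matters."""
    pending = [a for a in alerts if a.status is Status.PENDING_REVIEW]
    return sorted(pending, key=lambda a: a.criticality * a.model_score, reverse=True)


def expert_decision(alert: Alert, confirmed: bool, note: str) -> bool:
    """Record the analyst's verdict. Only a confirmed alert may become a work order."""
    if not note.strip():
        raise ValueError("every decision needs a reviewer note")
    alert.status = Status.CONFIRMED if confirmed else Status.REJECTED
    alert.reviewer_note = note
    return confirmed


alerts = [
    Alert("P-101A", "bearing defect frequency rising", 0.72, 3),
    Alert("GB-220", "thermal anomaly on drive-end bearing", 0.65, 5),
    Alert("FAN-7", "broadband noise increase", 0.90, 1),
]
for a in review_queue(alerts):
    print(a.asset_id, a.finding)
```

Requiring a note with every verdict also builds the labeled history that later improves the model.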

But don’t hand it the keys to the car. Make it your apprentice, not your advisor. In the end, reliable condition monitoring still demands a technician’s experience, an analyst’s discernment, and an engineer’s judgment. AI can listen. But it takes a human to truly hear.

Case Study: When AI Got It Right—Because Experts Framed the Problem

At a mining site operating Caterpillar 793D haul trucks, Razor Labs applied its predictive AI platform (Razor Labs, 2024). Early on, the system flagged multiple anomalies, many of which stemmed from normal operational changes: steep inclines, sharp turns, or overspeed conditions.

Left unchecked, these false positives could have led to unnecessary interventions and operator frustration. But once domain experts helped reframe the inputs—focusing the AI on steady-state conditions and excluding known transient anomalies—the system improved dramatically.

Weeks later, the AI detected a subtle but persistent drop in oil pressure. A developing oil pump issue was identified and addressed before failure, saving time and cost and reducing risk.

The AI didn’t detect failure. It detected deviation. The expert diagnosed it.
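The expert reframing in this case can be illustrated with a small sketch: evaluate only steady-state samples, and require a deviation to persist before raising it. This is not Razor Labs’ implementation, which the source does not describe; the signal names, steady-state thresholds, 400 kPa baseline, and 10% drop are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sample:
    grade_pct: float         # haul-road grade, percent
    steer_deg: float         # steering angle, degrees
    speed_kmh: float
    engine_rpm: float
    oil_pressure_kpa: float


def is_steady_state(s: Sample) -> bool:
    """Hypothetical steady-state window: near-flat, near-straight, moderate speed, engine loaded."""
    return (abs(s.grade_pct) < 2.0 and abs(s.steer_deg) < 5.0
            and 15.0 <= s.speed_kmh <= 45.0 and s.engine_rpm >= 1500)


def persistent_drop(samples: list[Sample], baseline_kpa: float,
                    drop_frac: float = 0.10, min_run: int = 5) -> Optional[int]:
    """Index at which oil pressure has stayed drop_frac below baseline for min_run
    consecutive steady-state samples, or None. Transient samples (inclines, turns,
    overspeed) are skipped: they neither extend nor reset the run."""
    run = 0
    for i, s in enumerate(samples):
        if not is_steady_state(s):
            continue
        if s.oil_pressure_kpa < baseline_kpa * (1 - drop_frac):
            run += 1
            if run >= min_run:
                return i
        else:
            run = 0
    return None


samples = ([Sample(0.5, 1.0, 30, 1700, 400)] * 3      # normal steady-state running
           + [Sample(8.0, 2.0, 12, 1900, 300)]        # steep climb: transient, ignored
           + [Sample(0.5, 1.0, 30, 1700, 350)] * 5)   # sustained low pressure on the flat
print(persistent_drop(samples, baseline_kpa=400.0))
```

The thresholds in `is_steady_state` are exactly the part the domain experts supplied; the code only applies them.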

AI as a Platform for Skills Development and Standardized Work

While AI absolutely requires expert oversight, it also delivers one of the most transformative opportunities in decades to scale expertise and institutionalize standardized work—two long-standing Achilles’ heels in physical asset management.

In a world where experienced tradespeople are retiring faster than we can replace them, and where tribal knowledge too often vanishes into thin air, AI offers a way to embed best practices into the work itself, making high-quality execution less dependent on who’s holding the wrench.

AI-powered platforms can bridge the skills gap by linking real-time asset data—vibration anomalies, thermal irregularities, or pressure deviations—to instructional content. That might mean a short video on how to retorque a flange to spec, a visual overlay of a lubrication route, or a voice-guided walkthrough of a diagnostic flowchart.

These just-in-time microlearning modules turn AI into a digital mentor, surfacing the right knowledge at the right moment in the right format. That is especially critical for FLAB-intensive tasks like ensuring correct bolt sequence, verifying shaft alignment tolerances, or executing a run-in oil flush.

But what makes this powerful isn’t the flash—it’s the repeatability. AI enables us to convert tribal knowledge into structured, scenario-based guidance that technicians can follow again and again, reducing variability and elevating the floor of performance across the workforce. It transforms execution from memory-based to playbook-driven.

Work instructions can be dynamically adjusted based on asset condition, environment, and skill level, allowing for adaptive guidance while preserving procedural integrity.
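A minimal sketch of adaptive guidance that still preserves procedural integrity: variants are selected by task, skill level, and environment, but only content carrying an engineering sign-off is ever served. The `Guidance` fields, the skill scale, and the sample content are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Guidance:
    task: str
    skill_levels: frozenset[int]  # levels this variant is written for (1 = apprentice, 3 = senior)
    environment: Optional[str]    # e.g. "salt-laden"; None means generic
    content: str
    approved_by: Optional[str]    # engineering sign-off; None means still a draft


def select_guidance(library: list[Guidance], task: str, skill: int,
                    environment: Optional[str]) -> Optional[Guidance]:
    """Return approved guidance matching task, skill, and environment, or None.
    Environment-specific variants win over generic ones."""
    candidates = [g for g in library
                  if g.task == task
                  and g.approved_by is not None      # never serve unvalidated content
                  and skill in g.skill_levels
                  and g.environment in (None, environment)]
    candidates.sort(key=lambda g: g.environment is None)  # False (specific) sorts first
    return candidates[0] if candidates else None


TASK = "RETORQUE-FLANGE"
library = [
    Guidance(TASK, frozenset({1}), None, "Full walkthrough with video and step prompts", "reliability engineer"),
    Guidance(TASK, frozenset({2, 3}), None, "Condensed checklist", "reliability engineer"),
    Guidance(TASK, frozenset({2, 3}), "salt-laden", "Checklist plus stud corrosion inspection", "reliability engineer"),
    Guidance(TASK, frozenset({1}), "salt-laden", "Walkthrough plus corrosion steps (draft)", None),
]
print(select_guidance(library, TASK, 1, "salt-laden").content)
print(select_guidance(library, TASK, 3, "salt-laden").content)
```

Because draft content is never served, adaptivity cannot bypass the expert who defined what good looks like.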

Still, this only works if seasoned professionals build the foundational content. AI can deliver knowledge, but it can’t define what “good” looks like. It can recommend torque values—but only if the values were validated by engineers who understand joint integrity.

It can walk a technician through a standard lube route—but only if that route was designed with precision intervals, the right grease types, and proper contamination controls in mind. In short, AI can distribute wisdom, but it can’t originate it. And that’s why—once again—it takes an expert eye to create the playbook before AI can help enforce it.

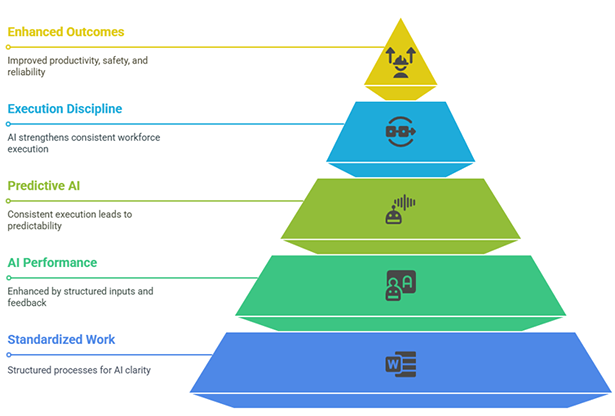

Enabling the Discipline of Standardized Work

AI doesn’t just benefit from standardized work—it depends on it. The discipline of standardization is what gives AI the signal clarity it needs to deliver meaningful insights and consistent support. Without structured, repeatable processes, AI becomes a pattern-matching engine without patterns.

But when work is standardized—when every job is executed according to a clear plan with defined outcomes—AI can become a powerful force multiplier for precision, productivity, and continuous improvement.

To operate effectively, AI requires a foundation of clean, structured inputs. That means:

- Standard job plans that include detailed task lists, job risk assessments (JRAs), bills of materials (BOMs), required tools, torque specs, and built-in quality checks.

- Defined inspection criteria and clear condition-based triggers for intervention—so anomalies are actionable, not ambiguous.

- Closed-loop feedback mechanisms that capture what actually happened in the field—task duration, failure resolution, deviations from plan—and feed those insights back into the model to refine its recommendations over time.
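These three requirements translate naturally into data structures. The sketch below assumes a hypothetical `JobPlan` record covering the elements listed above, a completeness check run before a plan is released, and a close-out record that captures what actually happened. Field names and sample values (including the torque figure) are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class JobPlan:
    job_id: str
    tasks: list[str]
    jra_id: str                        # job risk assessment reference
    bom: dict[str, int]                # part number -> quantity
    tools: list[str]
    torque_specs_nm: dict[str, float]  # joint -> torque (N·m); may be empty if no bolted joints
    quality_checks: list[str]
    cm_trigger: str                    # condition-based trigger for intervention
    planned_hours: float


@dataclass
class CompletionFeedback:
    job_id: str
    actual_hours: float
    failure_resolved: bool
    deviations: list[str] = field(default_factory=list)


def missing_elements(plan: JobPlan) -> list[str]:
    """Name each required element left empty, so incomplete plans are caught before release."""
    required = {"tasks": plan.tasks, "jra_id": plan.jra_id, "bom": plan.bom,
                "tools": plan.tools, "quality_checks": plan.quality_checks,
                "cm_trigger": plan.cm_trigger}
    return [name for name, value in required.items() if not value]


def close_out(plan: JobPlan, fb: CompletionFeedback) -> dict:
    """Compare field reality with the plan; this record is what gets fed back."""
    return {"job_id": plan.job_id,
            "hours_delta": fb.actual_hours - plan.planned_hours,
            "resolved": fb.failure_resolved,
            "deviation_count": len(fb.deviations)}


plan = JobPlan("JP-PUMP-SEAL",
               ["Isolate and lock out", "Replace mechanical seal", "Verify shaft alignment"],
               "",  # JRA not yet attached
               {"SEAL-KIT-40": 1}, ["torque wrench", "laser alignment kit"],
               {"casing bolts": 120.0},  # illustrative value only, not a real spec
               ["Leak check at operating pressure"], "Seal leak rate above limit", 4.0)
fb = CompletionFeedback("JP-PUMP-SEAL", 5.5, True, ["seal kit substituted"])
print(missing_elements(plan))
print(close_out(plan, fb))
```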

This creates a symbiotic flywheel: standardization improves AI performance, and AI strengthens standardization. The more consistent the execution, the more predictive the AI. The more predictive the AI, the easier it becomes to maintain execution discipline—even across large, distributed workforces.

This virtuous cycle enhances not just productivity, but predictability, safety, and asset reliability. It’s not automation for its own sake—it’s intelligent enablement, grounded in precision maintenance and governed by the playbook.

Figure 3. AI augments humans to improve the quality, effectiveness, and efficiency of work execution.

The MRO Opportunity: Beyond Failure Prediction

AI’s role in asset management doesn’t end with failure prediction—it extends deep into the heart of materials and MRO strategy, where inefficiencies often hide in plain sight. A well-deployed AI can unlock massive value by bringing visibility, intelligence, and foresight to storeroom operations—if, and only if, it’s grounded in expert-designed MRO frameworks.

AI can analyze historical and real-time consumption patterns across asset classes, shifts, and geographic locations to optimize inventory levels—reducing carrying costs without increasing stockouts. It can recommend substitute components based on reliability history, delivery lead times, or sourcing risk, helping planners make informed decisions when original parts aren’t immediately available.
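One common way to set inventory levels from consumption data is the textbook reorder point: expected demand over the lead time plus safety stock, ROP = d × L + z × σ × √L, where d is mean daily usage, σ its standard deviation, L the lead time in days, and z the normal quantile for the target service level. The sketch below is that formula, not any vendor’s algorithm, and it assumes independent, roughly normal daily demand and a fixed lead time; the usage figures are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def reorder_point(daily_usage: list[float], lead_time_days: float,
                  service_level: float = 0.95) -> float:
    """Textbook reorder point: mean demand over the lead time plus safety stock.

    Assumes independent, roughly normal daily demand and a fixed lead time.
    """
    d = mean(daily_usage)                         # average daily consumption
    sigma = stdev(daily_usage)                    # day-to-day variability
    z = NormalDist().inv_cdf(service_level)       # ~1.645 for a 95% service level
    safety_stock = z * sigma * math.sqrt(lead_time_days)
    return d * lead_time_days + safety_stock


# Hypothetical week of issues for a fast-moving consumable, with a 9-day lead time.
print(round(reorder_point([4, 6, 5, 7, 3, 5, 5], lead_time_days=9), 1))
```

Those assumptions break down for slow-moving, high-consequence critical spares, which is one reason the stocking policy for those items needs an expert.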

AI can flag potential counterfeit or non-spec components by analyzing supplier performance, traceability data, and specification deviations before the wrong part hits the floor. And by linking work orders with actual material usage, AI can detect chronic overuse or underuse of parts, lubricants, and consumables—often exposing underlying issues in work execution or BOM accuracy.
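Linking work orders to material issues can be sketched as a ratio of issued to planned quantity per job plan and part, aggregated over several jobs. The input layout, the 0.5 to 1.5 acceptance band, the three-job minimum, and the sample data are assumptions.

```python
from collections import defaultdict


def usage_anomalies(jobs, low: float = 0.5, high: float = 1.5, min_jobs: int = 3):
    """Flag (job plan, part) pairs whose issued quantity chronically departs from the BOM.

    jobs: iterable of (job_plan_id, part_number, bom_qty, issued_qty).
    Returns {(job_plan_id, part_number): issued/planned ratio} for pairs seen in
    at least min_jobs jobs whose aggregate ratio falls outside [low, high].
    """
    planned = defaultdict(float)
    issued = defaultdict(float)
    count = defaultdict(int)
    for plan, part, bom_qty, qty in jobs:
        planned[(plan, part)] += bom_qty
        issued[(plan, part)] += qty
        count[(plan, part)] += 1
    return {key: issued[key] / planned[key] for key in planned
            if count[key] >= min_jobs and planned[key] > 0
            and not low <= issued[key] / planned[key] <= high}


jobs = [
    ("JP-GB-OIL", "GEAR-OIL-L", 40, 70), ("JP-GB-OIL", "GEAR-OIL-L", 40, 75),
    ("JP-GB-OIL", "GEAR-OIL-L", 40, 80),
    ("JP-GB-OIL", "FILTER-EL-10", 1, 1), ("JP-GB-OIL", "FILTER-EL-10", 1, 1),
    ("JP-GB-OIL", "FILTER-EL-10", 1, 1),
    ("JP-PUMP-SEAL", "O-RING-KIT", 2, 0), ("JP-PUMP-SEAL", "O-RING-KIT", 2, 0),
    ("JP-PUMP-SEAL", "O-RING-KIT", 2, 0),
]
print(usage_anomalies(jobs))
```

A ratio near 1.9 on gear oil might mean a leak, a wrong BOM quantity, or poor execution; a ratio of zero on O-ring kits might mean the BOM is wrong or the step is being skipped. The code can only point; a person has to diagnose.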

But here’s the caveat: AI can’t build an MRO strategy. It doesn’t understand the difference between a critical spare and a nuisance part, nor does it grasp the procurement risks tied to geopolitical constraints, supplier reliability, or cost-of-failure considerations.

That still requires human expertise—people who understand lifecycle cost, lead time buffers, and the true consequences of downtime. AI can make your storeroom smarter, more responsive, and more aligned with operational needs—but only if it’s tethered to a sound MRO strategy, built by professionals who know that good parts management is asset reliability by another name.

Conclusion: Augment, Don’t Abdicate

AI is not your new maintenance planner, technician, or engineer. It’s a powerful assistant—one that gets stronger when paired with the expert eye. It can amplify pattern recognition, accelerate skills transfer, drive standardization, and improve MRO decision-making.

But in the end, interpretation is a human act. And in physical asset management, the cost of misinterpretation isn’t just inefficiency—it’s downtime, safety risk, and lost production.

Let AI crunch the numbers. Let your experts make the decisions.

That’s the future of smart maintenance.

References

- Razor Labs. (2024). Revolutionizing Mining Operations with AI Predictive Maintenance. IMARC 2024. https://razor-labs.com/revolutionizing-mining-operations-with-ai-preidictive-maintenance-imarc2024

- SmartDev. (2023). From Downtime to Uptime: How AI Predictive Maintenance is Rewriting the Rules of Manufacturing. https://smartdev.com/from-downtime-to-uptime-how-ai-predictive-maintenance-is-rewriting-the-rules-of-manufacturing